Order matters here. jPCT culls away back faces (unless you disable this with setCulling(), but the lighting will be wrong in that case). What counts as a back face and what doesn't is defined by the order of the vertices in a triangle. In your case, reverse the order of the vertices for the faces that aren't visible and they should show up.
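To illustrate why the vertex order matters, here is a minimal sketch in plain Java (no jPCT classes; `WindingDemo` and `faceNormal` are made-up names for this example). It computes a face normal from three vertices and shows that swapping two of them flips the normal, which is what turns a back face into a front face. Which of the two orders jPCT treats as front-facing is not stated above, so the sketch makes no claim about that.

```java
// Sketch only: plain Java, independent of jPCT.
final class WindingDemo {

    /** Returns the unnormalized face normal (b - a) x (c - a). */
    static float[] faceNormal(float[] a, float[] b, float[] c) {
        float ux = b[0] - a[0], uy = b[1] - a[1], uz = b[2] - a[2];
        float vx = c[0] - a[0], vy = c[1] - a[1], vz = c[2] - a[2];
        return new float[] {
            uy * vz - uz * vy,
            uz * vx - ux * vz,
            ux * vy - uy * vx
        };
    }

    public static void main(String[] args) {
        float[] a = {0, 0, 0};
        float[] b = {1, 0, 0};
        float[] c = {0, 1, 0};

        float[] original = faceNormal(a, b, c); // order a, b, c
        float[] reversed = faceNormal(a, c, b); // same triangle, b and c swapped

        // The z components have opposite signs: the face points the other way.
        System.out.println("original z = " + original[2]);
        System.out.println("reversed z = " + reversed[2]);
    }
}
```

In practice this means that for each invisible face, the three vertices passed to Object3D.addTriangle(...) would be given in the opposite order.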


#46

Support / Re: How does JPCT-AE Vertex works on Object3D.addTriangle(...)?

November 26, 2022, 09:31:27 PM

#47

Support / Re: Bug with diffuse lighting

November 11, 2022, 11:38:10 AM

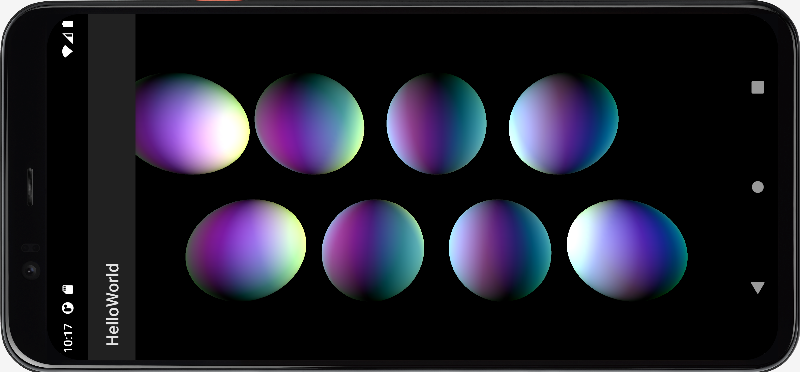

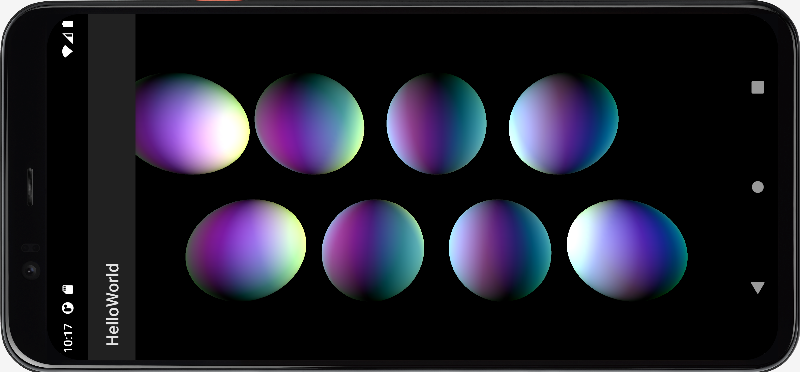

I made this test case, but I fail to see an issue with it:

```java
package com.threed.jpct.example;

import android.app.Activity;
import android.opengl.GLSurfaceView;
import android.os.Bundle;

import com.threed.jpct.Camera;
import com.threed.jpct.FrameBuffer;
import com.threed.jpct.Light;
import com.threed.jpct.Object3D;
import com.threed.jpct.Primitives;
import com.threed.jpct.RGBColor;
import com.threed.jpct.SimpleVector;
import com.threed.jpct.World;

import java.util.ArrayList;
import java.util.List;

import javax.microedition.khronos.egl.EGLConfig;
import javax.microedition.khronos.opengles.GL10;

public class HelloWorld extends Activity {

    private GLSurfaceView mGLView;
    private MyRenderer renderer = null;
    private FrameBuffer fb = null;
    private World world = null;
    private final List<Light> lights = new ArrayList<>();
    private float[] dir = null;

    protected void onCreate(Bundle savedInstanceState) {
        super.onCreate(savedInstanceState);
        mGLView = new GLSurfaceView(getApplication());
        mGLView.setEGLContextClientVersion(2);
        mGLView.setPreserveEGLContextOnPause(true);

        renderer = new MyRenderer();
        mGLView.setRenderer(renderer);
        setContentView(mGLView);
    }

    @Override
    protected void onPause() {
        super.onPause();
        mGLView.onPause();
    }

    @Override
    protected void onResume() {
        super.onResume();
        mGLView.onResume();
    }

    @Override
    protected void onStop() {
        super.onStop();
        System.exit(0);
    }

    class MyRenderer implements GLSurfaceView.Renderer {

        public MyRenderer() {
            //
        }

        public void onSurfaceChanged(GL10 gl, int w, int h) {
            world = new World();
            world.setAmbientLight(0, 0, 0);

            SimpleVector[] cols = new SimpleVector[]{
                    SimpleVector.create(64, 128, 64),
                    SimpleVector.create(0, 128, 128),
                    SimpleVector.create(128, 0, 128),
                    SimpleVector.create(128, 128, 0),
                    SimpleVector.create(0, 0, 128),
                    SimpleVector.create(128, 0, 0),
                    SimpleVector.create(0, 128, 0),
                    SimpleVector.create(0, 64, 64)};

            for (int i = 0; i < 8; i++) {
                Object3D obj = Primitives.getSphere(50, 8);
                world.addObject(obj);
                int pos = 30 + (i - 7) * 10;
                obj.translate(10 - 20 * (i % 2), pos, 0);
                obj.build();
            }

            for (int i = 0; i < 8; i++) {
                int pos = 30 + (i - 7) * 30;
                Light light = new Light(world);
                light.setIntensity(cols[i]);
                SimpleVector sv = new SimpleVector();
                sv.y = pos;
                sv.z -= 20 - 40 * (i % 2);
                light.setPosition(sv);
                lights.add(light);
            }

            dir = new float[lights.size()];
            for (int i = 0; i < lights.size(); i++) {
                dir[i] = 1f;
            }

            Camera cam = world.getCamera();
            cam.moveCamera(Camera.CAMERA_MOVEOUT, 45);
            cam.lookAt(SimpleVector.ORIGIN);

            fb = new FrameBuffer(w, h);
        }

        public void onSurfaceCreated(GL10 gl, EGLConfig config) {
            //
        }

        public void onDrawFrame(GL10 gl) {
            fb.clear(RGBColor.BLACK);
            world.renderScene(fb);
            world.draw(fb);
            fb.display();

            for (int i = 0; i < lights.size(); i++) {
                Light light = lights.get(i);
                SimpleVector sv = light.getPosition();
                sv.y += dir[i];
                if (sv.y > 150 || sv.y < -150) {
                    dir[i] *= -1f;
                }
                light.setPosition(sv);
            }
        }
    }
}
```

Do you have a test case that shows this problem?

#49

News / Re: Maintainability

November 09, 2022, 08:26:02 AM

As Aero said, it's not a problem. The stuff in the util-package is decoupled from the engine, everything that is done in there can be done with the means provided by the public interfaces.

#50

News / Re: Maintainability

November 08, 2022, 12:20:06 PM

The library is still maintained, but no new major features will be added to it in the foreseeable future. Therefore, releases contain mainly bug fixes and after all these years, there aren't many bugs to fix anymore. That's why the update frequency decreased.

#52

Support / Re: 3Dto2D

August 09, 2022, 08:57:24 AM

Sorry for not replying sooner, I was on holiday. But then again, I don't have much to add to what AeroShark333 already said. How to obtain the point in 3D depends heavily on your application.

#53

Support / Re: How to add more texture maps to obj

June 09, 2022, 08:02:35 AM

That looks a bit dark and actually as if it exploded somewhat. While loading, the Loader reports polygons with more than 4 points. The Loader doesn't support these and while this isn't the reason for texturing issues, it might be the reason for the destroyed look. Can you export the mesh with 3-4 points per polygon instead?
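To find the offending polygons before re-exporting, a quick check like the following might help. It is a minimal sketch in plain Java (not part of jPCT; `ObjFaceCheck` and `oversizedFaces` are hypothetical names) that assumes the usual OBJ text layout, where each face is a line starting with `f` followed by one whitespace-separated entry per vertex.

```java
import java.util.ArrayList;
import java.util.List;

// Sketch only: scans OBJ text for faces with too many vertices.
final class ObjFaceCheck {

    /** Returns the 1-based line numbers of "f" records with more than maxVertices vertices. */
    static List<Integer> oversizedFaces(String objText, int maxVertices) {
        List<Integer> hits = new ArrayList<>();
        String[] lines = objText.split("\\R");
        for (int i = 0; i < lines.length; i++) {
            String line = lines[i].trim();
            if (!line.startsWith("f ") && !line.startsWith("f\t")) {
                continue; // not a face record
            }
            // First token is "f", every further token is one vertex reference.
            int vertexCount = line.split("\\s+").length - 1;
            if (vertexCount > maxVertices) {
                hits.add(i + 1);
            }
        }
        return hits;
    }

    public static void main(String[] args) {
        String obj = "v 0 0 0\n"
                + "f 1 2 3\n"
                + "f 1 2 3 4\n"
                + "f 1/1 2/2 3/3 4/4 5/5\n";
        System.out.println("Faces with more than 4 points on lines: " + oversizedFaces(obj, 4));
    }
}
```

If this reports any lines, triangulating the mesh in the modelling tool before export should get rid of them.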

#54

Support / Re: How to add more texture maps to obj

June 08, 2022, 09:21:16 AM

Ok, but what does the rest look like? Blank? Textured with that one texture? Not present at all? A screen shot might be really helpful.

#55

Support / Re: How to add more texture maps to obj

June 07, 2022, 05:06:57 PM

Oh, and please wrap larger chunks of text or code into code-Tags (the # in the forum's editor). I did that for your former posts.

#56

Support / Re: How to add more texture maps to obj

June 07, 2022, 05:05:47 PM

I had a brief try at loading (but not rendering) the object and I can't see anything wrong with it. This is an excerpt from the log output:

```
2022-06-07 16:56:12.146 6204-6234/com.example.androidtestcase I/jPCT-AE: Text file from InputStream loaded...2796 bytes
2022-06-07 16:56:12.147 6204-6234/com.example.androidtestcase I/jPCT-AE: Processing new material FrontColor!
2022-06-07 16:56:12.147 6204-6234/com.example.androidtestcase I/jPCT-AE: Processing new material Color_E04!
2022-06-07 16:56:12.147 6204-6234/com.example.androidtestcase I/jPCT-AE: Processing new material Color_E03!
2022-06-07 16:56:12.147 6204-6234/com.example.androidtestcase I/jPCT-AE: Processing new material Color_007!
2022-06-07 16:56:12.147 6204-6234/com.example.androidtestcase I/jPCT-AE: Processing new material Carpet_Loop_Pattern!
2022-06-07 16:56:12.147 6204-6234/com.example.androidtestcase I/jPCT-AE: Processing new material _Wood__Floor_2!
2022-06-07 16:56:12.148 6204-6234/com.example.androidtestcase I/jPCT-AE: Processing new material Stone_Pavers_Flagstone_Gray!
2022-06-07 16:56:12.148 6204-6234/com.example.androidtestcase I/jPCT-AE: Texture named 02/Stone_Pavers_Flagstone_Gray.jpg added to TextureManager!
2022-06-07 16:56:12.148 6204-6234/com.example.androidtestcase I/jPCT-AE: Processing new material Wood__Floor!
2022-06-07 16:56:12.148 6204-6234/com.example.androidtestcase I/jPCT-AE: Texture named 02/Wood__Floor.jpg added to TextureManager!
2022-06-07 16:56:12.148 6204-6234/com.example.androidtestcase I/jPCT-AE: Processing new material Stone_Coursed_Rough!
2022-06-07 16:56:12.148 6204-6234/com.example.androidtestcase I/jPCT-AE: Texture named 02/Stone_Coursed_Rough.jpg added to TextureManager!
2022-06-07 16:56:12.148 6204-6234/com.example.androidtestcase I/jPCT-AE: Processing new material Groundcover_RiverRock_4inch!
```

Prior to loading the model, I assigned two textures to the manager:

```java
TextureManager.getInstance().addTexture("02/Carpet_Loop_Pattern.jpg", texture);
TextureManager.getInstance().addTexture("02/_Wood__Floor_2.jpg", texture2);
```

Note that these textures don't show up in the log; only the ones that have no matching names in the TextureManager do. So all is working as expected.

I'm not sure what your actual issue is... You loaded the textures and the model, but the textures don't show on the model? If that's the case, maybe you can post a screen shot of how the model looks instead?

#57

Support / Re: How to add more texture maps to obj

June 05, 2022, 04:02:00 PM

Can't you upload it to some file host? Or just mail a zipped version to info@jpct.net

#58

Support / Re: How to add more texture maps to obj

June 04, 2022, 07:00:18 PM

Are these materials really used in the obj file? Maybe the export went wrong?

#59

Support / Re: How to add more texture maps to obj

June 04, 2022, 06:30:54 PM

That looks a bit strange....I expected some texture names to appear in the log. Can you upload the file so that I can have a look at it (next week...)?

#60

Support / Re: How to add more texture maps to obj

June 04, 2022, 05:40:26 PM

It should work that way. Have a look at the log output when loading the file. You should get log messages if a texture name can't be found and you should be able to see what exactly these names are.
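If you'd rather check the names up front than read them from the log, something like the following could list them. It is a minimal sketch in plain Java (not part of jPCT; `MtlTextureNames` and `textureNames` are hypothetical names) and it assumes that the names in question are the values of the `map_Kd` lines in the .mtl file, with no extra options in front of the file name.

```java
import java.util.LinkedHashSet;
import java.util.Set;

// Sketch only: collects the diffuse texture names referenced by an .mtl file.
final class MtlTextureNames {

    /** Returns the distinct map_Kd values in the order in which they first appear. */
    static Set<String> textureNames(String mtlText) {
        Set<String> names = new LinkedHashSet<>();
        for (String raw : mtlText.split("\\R")) {
            String line = raw.trim();
            if (line.startsWith("map_Kd ")) {
                // Simplification: treats everything after the keyword as the name.
                names.add(line.substring("map_Kd ".length()).trim());
            }
        }
        return names;
    }

    public static void main(String[] args) {
        String mtl = "newmtl Wood__Floor\n"
                + "Kd 1.0 1.0 1.0\n"
                + "map_Kd 02/Wood__Floor.jpg\n"
                + "newmtl FrontColor\n"
                + "Kd 0.5 0.5 0.5\n";
        for (String name : textureNames(mtl)) {
            System.out.println("Texture to register: " + name);
        }
    }
}
```

Each name found this way would then be added to the TextureManager under exactly that string before loading the model.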